1. Configuring the GemStone Server

This chapter tells you how to configure the GemStone server processes, repository, transaction logs, and shared page cache to meet the needs of a specific application.

This chapter describes configuring the GemStone server; for information about configuring session processes for clients, refer to Chapter 2.

1.1 Configuration Overview

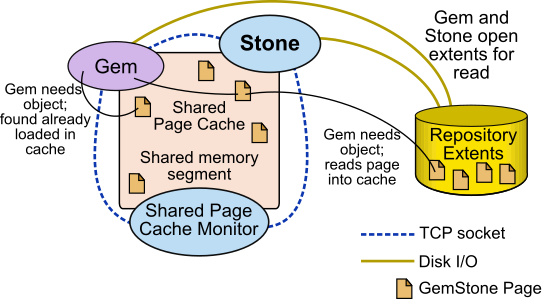

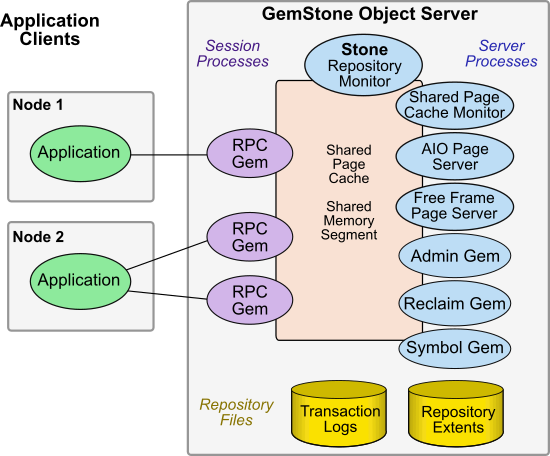

Figure 1.1 shows the basic GemStone/S 64 Bit architecture. The object server can be thought of as having two active parts. The server processes consist of the Stone repository monitor and a set of subordinate processes. These processes provide resources to individual Gem session processes, which are servers to application clients.

The elements shown in Figure 1.1 can be distributed across multiple nodes to meet your application’s needs. For information about establishing distributed servers, refer to Chapter 3.

Figure 1.1 The GemStone Object Server

Note the key parts that define the server configuration:

- The Stone repository monitor process acts as a resource coordinator. It synchronizes critical repository activities and ensures repository consistency.

- The shared page cache monitor creates and maintains a shared page cache for the GemStone server. The monitor balances page allocation among processes, ensuring that a few users or large objects do not monopolize the cache. The size of the shared page cache is configurable and should be scaled to match the size of the repository and the number of concurrent sessions; a larger size almost always provides better performance.

- The AIO page server performs asynchronous I/O for the Stone repository monitor, in particular to update the extents periodically based on changes in the shared page cache.

- The Free Frame Page Server is dedicated to the task of adding free frames to the free frame list, from which a Gem can take as needed.

- The Admin Gem performs the administrative garbage collection tasks.

- The Reclaim Gem performs page reclaim operations on both shadow objects and dead objects.

- The Symbol Gem is a Gem server background process that is responsible for creating all new Symbols, based on session requests that are managed by the Stone.

- Objects are stored on the disk in one or more extents, which can be files in the file system, data in raw partitions, or a mixture.

- Transaction logs permit recovery of committed data if a system crash occurs. In full logging mode, transaction logs can also be used with GemStone backups for full recovery of committed transactions in the event of media failure.

The Server Configuration File

At start-up time, GemStone reads a system-wide configuration file. By default this file is $GEMSTONE/data/system.conf, where GEMSTONE is an environment variable that points to the directory in which the GemStone software is installed.

Appendix A, “GemStone Configuration Options”, tells how to specify an alternate configuration file and how to use supplementary files to adjust the system-wide configuration for a specific GemStone executable. The appendix also describes each of the configuration options.

Here is a brief summary of important facts about the configuration file:

- Lines that begin with # are comments. Settings supplied as comments are the same as the default values.

- Parameters that begin with “GEM_” are read only by Gem session processes at the time they start; ones that begin with “STN_” are read only by the Stone repository monitor. Parameters that begin with “SHR_” are read both by the Stone repository monitor and by the first Gem session process to start on a node remote from the Stone, in which case they configure the local shared page cache.

- You may nest files containing configuration parameters by using the INCLUDE directive parameter.

- If a parameter is defined more than once, only the last definition is used (except for the special-purpose INCLUDE parameter).

- Adding an extent at run-time causes the repository monitor to append new configuration statements to the file. Be sure to check the end of a configuration file for possible entries that override earlier ones.

- Most configuration parameters have default values. If you do not specify a setting for a parameter, the default value is used. In many cases the default is also used if there is an error in your setting, such as an out-of-range value. To ensure that your settings are actually used, check the log file headers, or set the configuration parameter CONFIG_WARNINGS_FATAL to true.
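The rules above (comment lines, prefix-based readers, and last-definition-wins) can be illustrated with a small Python sketch. This is not GemStone's parser: it assumes each setting fits on one line in the form NAME = value; and that INCLUDE is written the same way. The function names parse_config and read_by are hypothetical.

```python
import re

# Assumed one-line setting syntax: NAME = value;
SETTING = re.compile(r"^(\w+)\s*=\s*(.*?);?\s*$")

def parse_config(text):
    """Return (settings, includes); a later definition replaces an earlier one."""
    settings, includes = {}, []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments carry no settings
        match = SETTING.match(line)
        if match is None:
            continue  # this sketch ignores anything it does not recognize
        name, value = match.groups()
        if name == "INCLUDE":
            includes.append(value)  # INCLUDE entries accumulate
        else:
            settings[name] = value  # last definition wins
    return settings, includes

def read_by(name):
    """Which processes read a parameter, judged only by its prefix."""
    if name.startswith("GEM_"):
        return "Gem session processes"
    if name.startswith("STN_"):
        return "Stone repository monitor"
    if name.startswith("SHR_"):
        return "Stone, and the first Gem on a remote node"
    return "not covered by the prefix rules above"
```

Running parse_config over the text of a configuration file shows which value of a repeated parameter would take effect, which is useful when the Stone has appended entries to the end of the file.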

Example Configuration Settings

The configuration file provided in the GemStone distribution, $GEMSTONE/data/system.conf, supplies default values that are suitable as an initial configuration for small systems.

This section describes possible sets of configuration parameter changes that may be useful for larger systems. These settings provide a starting point; the actual values will need to be adjusted for your particular hardware and application requirements. They give some sense of how the more important configuration parameters scale relative to each other.

Large systems will almost certainly require additional tuning for optimal performance. GemStone Professional Services can provide expert assistance in establishing your configuration and in tuning it for performance and growth.

More information about these settings is provided in the detailed instructions for establishing your own configuration, under How To Establish Your Configuration. For details on the specific configuration parameters, see Appendix A.

Gemservers for Large Configurations

Depending on your hardware, there is an upper limit to the number of processes that you can run before performance becomes unacceptable. For very large configurations, it may be useful to establish a separate Gem server machine with a remote shared page cache set up specifically to run Gem sessions, with a high bandwidth connection between the repository server and the Gem server.

Table note a: Depends on the value of GEM_TEMPOBJ_CACHE_SIZE (default = 50 MB, but a larger setting is often required).

Recommendations About Disk Usage

You can enhance server performance by distributing the repository files on multiple disk drives. If you are using a SAN or certain RAID configurations, or have configured multiple logical disks on the same physical spindle, the following discussion is not entirely applicable.

Why Use Multiple Drives?

Efficient access to GemStone repository files requires that the server node have at least three disk drives (that is, three separate spindles or physical volumes) to reduce I/O contention. For instance:

- One disk for swap space and the operating system (GemStone executables can also reside here).

- One disk for the repository extent, perhaps with a lightly accessed file system sharing the drive.

- One disk for transaction logs and possibly user file systems if they are only lightly used for non-GemStone purposes.

When developing your own configuration, bear in mind the following guidelines:

1. Keep extents and transaction logs separate from operating system swap space. Don’t place either extents or logs on a disk that contains a swap partition; doing so drastically reduces performance.

2. Place the transaction logs on a disk that does not contain extents. Placing logs on a different disk from extents increases the transaction rate for updates while reducing the impact of updates on query performance. You can place multiple logs on the same disk, since only one log file is active at a time.

3. To benefit from multiple extents on multiple disks, you must use weighted allocation mode. If you use sequential allocation, multiple extents provide little benefit. For details about weighted allocation, see Allocating Data to Multiple Extents.

When to Use Raw Partitions

Each raw partition (sometimes called a raw device or raw logical device) is like a single large sequential file, with one extent or one transaction log per partition. The use of raw disk partitions can yield better performance, depending on how they are used and the balancing of system resources.

Placing transaction logs on raw disk partitions is likely to yield better performance.

Usually, placing extents on file systems is as fast as using raw disk partitions. It is possible for this to yield better performance, if doing so reduces swapping; but it is not recommended to use configurations in which swapping occurs. Sufficient RAM should be made available for file system buffers and the shared page cache.

The use of raw partitions for transaction logs is useful for achieving the highest transaction rates in an update-intensive application because such applications primarily are writing sequentially to the active transaction log. Using raw partitions can improve the maximum achievable rate by avoiding the extra file system operations necessary to ensure that each log entry is recorded on the disk. Transaction logs use sequential access exclusively, so the devices can be optimized for that access.

Because each partition holds a single log or extent, if you place transaction logs in raw partitions, you must provide at least two such partitions so that GemStone can preserve one log when switching to the next. If your application has a high transaction volume, you are likely to find that increasing the number of log partitions makes the task of archiving the logs easier.

For information about using raw partitions, see How To Set Up a Raw Partition.

Developing a Failover Strategy

In choosing a failover strategy, consider the following needs:

- Applications that cannot tolerate the loss of committed transactions should mirror the transaction logs (using OS-level tools) and use full transaction logging. A mirrored transaction log on another device allows GemStone to recover from a read failure when it starts up after an unexpected shutdown. Full logging mode allows transactions to be rolled forward from a GemStone backup to recover from the loss of an extent without data loss.

- Applications that require rapid recovery from the loss of an extent (that is, without the delay of restoring from a backup) may wish to replicate all extents on other devices through hardware means, in addition to mirroring transaction logs. Restoring a large repository (many GB) from a backup may take a significant time.

- Setting up a warm or hot standby system can allow fast failover. See Warm and Hot Standbys.

1.2 How To Establish Your Configuration

Configuring the GemStone object server involves the following steps:

1. Gather application specifics about the size of the repository and the number of sessions that will be logged in simultaneously.

2. Plan the operating system resources that will be needed: memory and swap (page) space.

3. Set the size of the GemStone shared page cache and the number of sessions to be supported.

4. Configure the repository extents.

5. Configure the transaction logs.

6. Set GemStone file permissions to allow necessary access while providing adequate security.

Gathering Application Information

When you begin configuring GemStone, be sure to have the following information at hand:

- The number of simultaneous sessions that will be logged in to the repository (in some applications, each user can have more than one session logged in).

- The approximate size of your repository. It’s also helpful, but not essential, to know the approximate number of objects in the repository.

This information is central to the sizing decisions that you must make.

Planning Operating System Resources

GemStone needs adequate memory and swap space to run efficiently. It also needs adequate kernel resources—for instance, kernel parameters can limit the size of the shared page cache or the number of sessions that can connect to it.

Estimating Server Memory Needs

The amount of memory required to run your GemStone server depends mostly on the size of the repository and the number of users who will be logged in to active GemStone sessions at one time. These needs are in addition to the memory required for the operating system and other software.

- The Stone and related processes need memory as shown in the configurations in Table 1.1. Note that this amount of memory is only for the server processes.

- The shared page cache should be increased in proportion to the overall size of your repository. Typically it should be at least 10% of the repository size to provide adequate performance, and the larger the better. Performance is best when the entire repository fits into memory and cache warming is used to load all pages into memory.

On a node that is dedicated to running GemStone, we recommend in general that you allocate approximately one-third to one-half of your total system RAM to the shared page cache. If it is not a dedicated node, you may need to reduce the size to avoid swapping.

- Each Gem session process needs at least 30 MB of memory on the node where it runs; more may be needed, depending on the setting for GEM_TEMPOBJ_CACHE_SIZE. (See the discussion of memory needs for session processes.) Each Gem process that runs on a remote (client) node also needs about 0.25 MB on the server node for a GemStone page server process that accesses the repository extents.
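As a rough planning aid, the per-session figures and the one-third to one-half guideline above can be turned into arithmetic. The following Python sketch is only an approximation under those stated figures; the function names are hypothetical, and the 30 MB minimum per Gem will be too low when GEM_TEMPOBJ_CACHE_SIZE is raised.

```python
def session_memory_mb(local_gems, remote_gems,
                      per_gem_mb=30.0, per_remote_pgsvr_mb=0.25):
    """Minimum server-node memory for sessions, in MB.

    local_gems run on the server node (at least 30 MB each);
    remote_gems each need a page server on the server node (about 0.25 MB).
    """
    return local_gems * per_gem_mb + remote_gems * per_remote_pgsvr_mb

def dedicated_node_cache_range_mb(system_ram_mb):
    """One-third to one-half of RAM, the guideline for a dedicated node."""
    return system_ram_mb / 3, system_ram_mb / 2
```

For example, 20 local Gems and 8 remote Gems need at least 602 MB on the server node for sessions, in addition to the shared page cache and the Stone's own processes.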

Estimating Server Swap Space Needs

To provide reasonable flexibility, the total swap space on your system (sometimes called page space) in general should be at least equal to the system RAM. Preferably, swap space should be twice as much as system RAM. For example, a system with 4 GB of RAM should have at least 4 GB of swap space. The command to find out how much swap space is available (swap, swapinfo, pstat, or lsvg) depends on your operating system. Your GemStone/S 64 Bit Installation Guide contains an example for your platform.

Swap space should not be on a disk drive that contains any of the GemStone repository extent files. In particular, do not use operating system utilities like swap or swapon to place part of the swap space on a disk that also contains the GemStone extents or transaction logs.

If you want to determine the additional swap space needed just for GemStone, use the memory requirements derived in the preceding section, including space for the number of sessions you expect. These figures will approximate GemStone’s needs beyond the swap requirement for UNIX and other software such as the X Window System.

Estimating Server File Descriptor Needs

When they start, most GemStone processes attempt to raise their file descriptor limit from the default (soft) limit to the hard limit set by the operating system. In the case of the Stone repository monitor, the processes that raise the limit this way are the Stone itself and two of its child processes, the AIO page server and the Admin Gem. The Stone uses file descriptors this way:

- 9 for stdin, stdout, stderr, and internal communication

- 2 for each user session that logs in

- 1 for each local extent or transaction log within a file system

- 2 for each extent or transaction log that is a raw partition

- 1 for each extent or transaction log that is on a remote node

You can cause the above processes to set a limit less than the system hard limit by setting the GEMSTONE_MAX_FD environment variable to a positive integer. A value of 0 disables attempts to change the default limit.
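The Stone's descriptor usage listed above adds up as shown in the following Python sketch. The function name and argument names are hypothetical; the coefficients are the ones given in the list.

```python
def stone_fd_estimate(sessions, fs_files=0, raw_files=0, remote_files=0):
    """Estimate the file descriptors the Stone uses.

    sessions     -- user sessions logged in (2 descriptors each)
    fs_files     -- local extents or transaction logs in a file system (1 each)
    raw_files    -- extents or transaction logs on raw partitions (2 each)
    remote_files -- extents or transaction logs on a remote node (1 each)
    """
    base = 9  # stdin, stdout, stderr, and internal communication
    return base + 2 * sessions + fs_files + 2 * raw_files + remote_files
```

Comparing the result with the hard limit on your system indicates whether the limit needs to be raised before the planned number of sessions can log in.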

The shared page cache monitor always attempts to raise its file descriptor limit to equal its maximum number of clients plus five for stdin, stdout, stderr, and internal communication. The maximum number of clients is set by the SHR_PAGE_CACHE_NUM_PROCS configuration option, which is normally computed from the STN_MAX_SESSIONS configuration option.

Reviewing Kernel Tunable Parameters

Operating system kernel parameters limit the interprocess communication resources that GemStone can obtain. It’s helpful to know what the existing limits are so that you can either stay within them or plan to raise the kernel limits. There are four parameters of primary interest:

- The maximum size of a shared memory segment (typically shmmax or a similar name) limits the size of the shared page cache for each repository monitor.

For information about platform-specific limitations on the size of the shared page cache, refer to the GemStone/S 64 Bit Installation Guide for your platform.

- The maximum number of semaphores per semaphore id limits the number of sessions that can connect to the shared page cache, because each session uses two semaphores. (Typically this parameter is semmsl or a similar name, although it is not tunable under all operating systems.)

- The maximum number of users allowed on the system (typically maxusers or a similar name) can limit the number of logins and sometimes also is used as a variable in the allocation of other kernel resources by formula. In the latter case, you may need to set it somewhat larger than the actual number of users.

- The hard limit set for the number of file descriptors can limit the total number of logins and repository extents, as described previously.

How you determine the existing limits depends on your operating system. If the information is not readily available, proceed anyway. A later step shows how to verify that the shared memory and semaphore limits are adequate for the GemStone configuration you chose.

Checking the System Clock

The system clock should be set to the correct time. When GemStone opens the repository at startup, it compares the current system time with the recorded checkpoint times as part of a consistency check. A system time earlier than the time at which the last checkpoint was written may be taken as an indication of corrupted data and prevent GemStone from starting. The time comparisons use GMT. It is not necessary to adjust GemStone for changes to and from daylight saving time.

/opt/gemstone/locks and /opt/gemstone/log

GemStone requires access to two directories under /opt/gemstone/:

- /opt/gemstone/locks is used for lock files, which among other things provide the names, ports, and other important data used in interprocess communication and reported by gslist.

Under normal circumstances, you should never have to directly access files in this directory. To clear out lock files of processes that exited abnormally, use gslist -c.

- /opt/gemstone/log is the default location for NetLDI log files, if startnetldi does not explicitly specify a location using the -l option.

If /opt/gemstone/ does not exist, GemStone may use /usr/gemstone/ instead.

Alternatively, you can use the environment variable GEMSTONE_GLOBAL_DIR to specify a different location. Since the files in this location control visibility of GemStone processes to one another, all GemStone processes that interact must use the same directory.
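The choice of directory described above can be pictured as a simple lookup. The Python sketch below is an illustration only: the order of precedence (environment variable first, then /opt/gemstone, then /usr/gemstone) is an assumption, and the function name is hypothetical. GemStone performs its own lookup internally.

```python
import os

def global_dir(environ=None, exists=os.path.isdir):
    """Return the directory assumed to hold the locks and log subdirectories.

    Assumed precedence: GEMSTONE_GLOBAL_DIR, then /opt/gemstone,
    then /usr/gemstone. Returns None if none of them is available.
    """
    environ = os.environ if environ is None else environ
    override = environ.get("GEMSTONE_GLOBAL_DIR")
    if override:
        return override
    for candidate in ("/opt/gemstone", "/usr/gemstone"):
        if exists(candidate):
            return candidate
    return None
```

Running the same check in the environment of each process that must interact is a quick way to confirm that they all resolve to the same directory.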

Host Identifier

/opt/gemstone/locks (or an alternate directory, as described above) is also the location for a file named gemstone.hostid, which contains the unique host identifier for this host. This file is created by the first GemStone process on that host to require a unique identifier, by reading eight bytes from /dev/random. This unique hostId is used instead of host name or IP address for GemStone inter-process communication, avoiding issues with multi-homed hosts and changing IP addresses.

You can access the host identifier for the machine hosting the gem session using the method System class >> hostId.
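To give a sense of what an eight-byte identifier looks like, the following Python sketch draws eight random bytes and prints them as hexadecimal. It is a stand-in, not GemStone's code: it uses os.urandom rather than reading /dev/random directly, the hexadecimal rendering is an assumption, and the function name is hypothetical.

```python
import os

def new_host_id():
    """Return eight random bytes as a 16-digit hexadecimal string."""
    return os.urandom(8).hex()
```

Eight bytes always render as 16 hexadecimal digits, so each call returns a different 16-character string.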

GemStone shared libraries

Your GemStone installation includes shared library files as well as executables. Access to these shared library files is required for the GemStone executables. In the standard installation of the GemStone software, these shared libraries are located in the $GEMSTONE/lib and $GEMSTONE/lib32 directories.

For installations that do not include a full server, such as remote nodes running gems, or GemBuilder clients, these libraries may be put in a directory other than this standard. See the Installation Guide for more information.

To Set the Page Cache Options and the Number of Sessions

You will configure the shared page cache and the Stone’s private page cache according to the size of the repository and the number of sessions that will connect to it simultaneously. Then use a GemStone utility to verify that the OS kernel will support this configuration.

Shared Page Cache

The GemStone shared page cache system consists of two parts: the shared page cache itself, a shared memory segment; and a monitor process, the shared page cache monitor (shrpcmonitor). Figure 1.2 shows the connections between these two and the main GemStone components when GemStone runs on a single node.

The shared page cache resides in a segment of the operating system’s virtual memory that is available to any authorized process. When the Stone or a Gem session process needs to access an object in the repository, it first checks to see whether the page containing that object is already in the cache. If the page is already present, the process reads the object directly from shared memory. If the page is not present, the process reads the page from the disk into the cache, where all of its objects also become available to other processes.

The name of the shared page cache monitor is derived from the name of the Stone repository monitor and a host-specific ID; for instance, gs64stone~d7e2174792b1f787. This host-specific ID is created by the first GemStone process to use a particular host, and remains the same for anything running on that host.

Each Stone has a single shared page cache on its own node, and may have remote page caches on other nodes in distributed configurations (discussed in detail in Chapter 3). The Stone spawns the shared page cache monitor automatically during startup, and the shared page cache monitor creates the shared memory region and allocates the semaphores for the system. All sessions that log into this Stone connect to this shared page cache and monitor process.

Estimating the Size of the Shared Page Cache

The goal in sizing the shared page cache is to make it large enough to hold the application’s working set of objects, to avoid the performance overhead of having to read pages from the disk extents. In addition, the pages that make up the object table, which holds pointers to the data pages, need to fit in the shared page cache. At the same time, there should be sufficient extra memory available over and above what the shared page cache requires, to allow for other memory caches, heap space, etc. In general, the shared page cache should be configured at no more than 75% of the available memory, though the actual limit depends on factors such as Gem temporary object cache size and other load on the system.

- For maximum performance, the shared page cache can be made large enough to hold the entire repository. After cache warming, sessions should always find the objects in the cache, and never have to perform page reads.

- Configuring the shared page cache as large as possible up to the size of the repository and about 75% of system RAM is recommended for the best performance.

- A shared page cache size of less than 10% of your repository size is likely to impact performance, depending on your application details.

See Example Configuration Settings for possible starting points.

Ultimately, the cache size needed depends on the working set size (the number of objects and transactional views) that need to be maintained in the cache to provide adequate performance. Once your application is running, you can refine your estimate of the optimal cache size by monitoring the free space, and use this to tune your configuration. See Monitoring Performance; some relevant statistics are NumberOfFreeFrames, FramesFromFreeList, and FramesFromFindFree.

For example, to set the size of the shared page cache to 1.5 GB:

SHR_PAGE_CACHE_SIZE_KB = 1500 MB;
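The sizing guidelines in this section can be combined into a first estimate, as in the Python sketch below. It assumes the cache is capped at the smaller of the repository size and 75% of system RAM, and it flags a result below 10% of the repository size. The function name is hypothetical, and the result is a starting point to refine by monitoring, not a recommendation from GemStone.

```python
def suggest_cache_mb(repository_mb, system_ram_mb, ram_percent=75):
    """Return (cache_mb, below_floor).

    cache_mb    -- smaller of the repository size and ram_percent of RAM
    below_floor -- True if cache_mb is under 10% of the repository size,
                   which is likely to affect performance
    """
    ram_cap_mb = system_ram_mb * ram_percent / 100
    cache_mb = min(repository_mb, ram_cap_mb)
    below_floor = cache_mb < repository_mb / 10
    return cache_mb, below_floor
```

A 10000 MB repository on a node with 8000 MB of RAM yields a 6000 MB cache; a 100000 MB repository on the same node yields the same cache size but is flagged as below the 10% guideline.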

Verifying OS support for sufficient Shared Memory

Step 1. Ensure that your OS is configured to allow large shared memory segments, as described in the Installation Guide for your platform.

Step 2. The shared page cache sizing depends in part on the maximum number of sessions that will be logged in simultaneously. Ensure that the STN_MAX_SESSIONS configuration option is set correctly for your application requirements.

Step 3. Use GemStone’s shmem utility to verify that your OS kernel supports the chosen cache size and number of processes. The command line is

$GEMSTONE/install/shmem existingFile cacheSizeKB numProcs

- $GEMSTONE is the directory where the GemStone software is installed.

- existingFile is the name of any writable file, which is used to obtain an id (the file is not altered).

- cacheSizeKB is the SHR_PAGE_CACHE_SIZE_KB setting.

- numProcs is the value calculated for SHR_PAGE_CACHE_NUM_PROCS.

On an existing system, this value is reported in the Stone log file. It is computed as STN_MAX_SESSIONS + STN_MAX_GC_RECLAIM_SESSIONS + SHR_NUM_FREE_FRAME_SERVERS + STN_NUM_LOCAL_AIO_SERVERS + 8.

For instance, for a 1.5 GB shared cache and numProcs calculated using all default configuration settings:

% touch /tmp/shmem

% $GEMSTONE/install/shmem /tmp/shmem 1500000 52

% rm /tmp/shmem

If shmem is successful in obtaining a shared memory segment of sufficient size, no message is printed. Otherwise, diagnostic output will help you identify the kernel parameter that needs tuning. The total shared memory requested includes cache overhead for cache space and per-session overhead, so the actual shared memory segment is somewhat larger than the requested cache size (the exact figure varies by system).
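The numProcs formula and the shmem command line from Step 3 can be assembled as in the following Python sketch. The function names are hypothetical, and the values in the example that follows are illustrative rather than the product defaults.

```python
def cache_num_procs(max_sessions, gc_reclaim_sessions,
                    free_frame_servers, local_aio_servers):
    """Sum of the four settings plus the fixed overhead of 8."""
    return (max_sessions + gc_reclaim_sessions
            + free_frame_servers + local_aio_servers + 8)

def shmem_command(gemstone_dir, existing_file, cache_size_kb, num_procs):
    """Build the shmem argument list: existingFile cacheSizeKB numProcs."""
    return [
        f"{gemstone_dir}/install/shmem",
        existing_file,
        str(cache_size_kb),
        str(num_procs),
    ]
```

For instance, 100 sessions, 4 reclaim sessions, 2 free frame servers, and 2 AIO servers give a numProcs of 116. The list returned by shmem_command could be passed to subprocess.run, with existing_file naming a file that already exists.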

Stone’s Private Page Cache

As the Stone repository monitor allocates resources to each session, it stores the information in its private page cache. The size of this cache is set by the STN_PRIVATE_PAGE_CACHE_KB configuration option. The default size of 2 MB is sufficient in most circumstances. If you think you might need to adjust this setting, contact GemStone Technical Support.

Diagnostics

The shared page cache monitor creates or appends to a log file, gemStoneNamePIDpcmon.log, in the same directory as the log for the Stone repository monitor. The PID portion of the name is the monitor’s process id. In case of difficulty, check for this log.

The operating system kernel must be configured appropriately on each node running a shared page cache. If startstone or a remote login fails because the shared cache cannot be attached, check gemStoneName.log and gemStoneNamePIDpcmon.log for detailed information.

The following configuration settings are checked at startup:

- The kernel shared memory resources must be enabled and sufficient to provide the page space specified by SHR_PAGE_CACHE_SIZE_KB plus the cache overhead.

The kernel semaphore resources must also be sufficient to provide an array of size (SHR_PAGE_CACHE_NUM_PROCS * 2) + 1 semaphores.

Use the shmem utility to test the settings (see Step 3). If multiple Stones are being run concurrently on the same node, each Stone requires a separate set of semaphores and separate semaphore id.

- Sufficient file descriptors must be available at startup to provide one descriptor for each of the SHR_PAGE_CACHE_NUM_PROCS processes plus an overhead of five. Compare the value computed for the SHR_PAGE_CACHE_NUM_PROCS configuration setting to the operating system file descriptor limit per process. Some operating systems report the descriptor limit in response to the Bash built-in command ulimit -a.

On operating systems that permit it, the shared page cache monitor attempts to raise the descriptor soft limit to the number required. In some cases, raising the limit may require superuser action to raise the hard limit or to reconfigure the kernel.
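The semaphore and descriptor requirements checked at startup can be estimated in advance. The Python sketch below uses the two formulas given above; the function names are hypothetical. The helper current_soft_fd_limit relies on Python's standard resource module, which is available only on UNIX-like systems.

```python
def semaphores_needed(num_procs):
    """Size of the semaphore array: (numProcs * 2) + 1."""
    return num_procs * 2 + 1

def descriptor_shortfall(num_procs, soft_limit):
    """Descriptors missing for the cache monitor (numProcs + 5); 0 if enough."""
    needed = num_procs + 5
    return max(0, needed - soft_limit)

def current_soft_fd_limit():
    """Soft limit on open files for the current process (UNIX only)."""
    import resource
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    return soft
```

A nonzero shortfall suggests that the monitor will need to raise its soft limit, and possibly that the hard limit must be raised by a superuser.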

To Configure the Repository Extents

Configuring the repository extents involves these primary considerations:

Estimating Extent Size

When you estimate the size of the repository, allow 10 to 20% for fragmentation. Also allow at least 0.5 MB of free space for each session that will be logged in simultaneously. In addition, while the application is running, overhead is needed for objects that are created or that have been dereferenced but are not yet removed from the extents. The amount of extent space required for this depends strongly on the particular application and how it is used.

Reclaim operations and sessions with long transactions may also require a potentially much larger amount of extent free space for temporary data. To avoid the risk of out of space conditions, it is recommended to allow a generous amount of free space.

If there is room on the physical disks, and the extents are not at their maximum sizes as specified using DBF_EXTENT_SIZES, then the extents will grow automatically when additional space is needed.

The extent sizes and limits that the system uses are always multiples of 16 MB; if you specify a number that is not a multiple of 16 MB, the next smaller multiple of 16 MB is actually used.

Example 1.1 Extent Size Including Working Space

Size of repository = 10 GB

Free-space allowance: 0.5 MB * 20 sessions = 10 MB

Fragmentation allowance: 10 GB * 15% = 1500 MB

Total with working space = approximately 11.5 GB
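The calculation in Example 1.1 can be expressed as a short Python sketch. The function name is hypothetical, 10 GB is treated as 10000 MB, and the result does not include the additional working space needed for new objects, reclaim operations, or long transactions.

```python
def extent_size_estimate_mb(repository_mb, sessions,
                            fragmentation_percent=15, per_session_mb=0.5):
    """Repository size plus per-session free space and fragmentation, in MB."""
    free_space_mb = per_session_mb * sessions
    fragmentation_mb = repository_mb * fragmentation_percent / 100
    return repository_mb + free_space_mb + fragmentation_mb
```

With a 10000 MB repository and 20 sessions, the estimate is 11510 MB, or about 11.5 GB. Varying fragmentation_percent between 10 and 20 shows the range implied by the fragmentation guideline.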

If the free space in extents falls below a level set by the STN_FREE_SPACE_THRESHOLD configuration option, the Stone takes a number of steps to avoid shutting down. For information, see Recovering from Disk-Full Conditions. The default setting for STN_FREE_SPACE_THRESHOLD is 5 MB, or 0.1% of the repository size, whichever is greater.

For planning purposes, you should allow additional disk space for making GemStone backups and for making a copy of the repository when upgrading to a new release. A GemStone full backup may occupy 75% to 90% of the total size of the extents, depending on how much space is free in the repository at the time.
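The default free-space threshold and the backup size range described above are simple to compute. The following Python sketch uses hypothetical function names and takes the figures from the text (5 MB or 0.1% of the repository size, and 75% to 90% of the total extent size).

```python
def free_space_threshold_mb(repository_mb):
    """Default STN_FREE_SPACE_THRESHOLD: the greater of 5 MB and 0.1%."""
    return max(5.0, repository_mb / 1000)

def backup_size_range_mb(extent_total_mb):
    """Likely size range of a full backup: 75% to 90% of the extents."""
    return extent_total_mb * 75 / 100, extent_total_mb * 90 / 100
```

For a 10000 MB repository the default threshold is 10 MB; for a 2000 MB repository it stays at the 5 MB minimum.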

Choosing the Extent Location

You should consider the following factors when deciding where to place the extents:

- Keep extents on a spindle different from operating system swap space.

- Where possible, keep the extents and transaction logs on separate spindles.

Specify the location of each extent in the configuration file. The following example uses two raw disk partitions (your partition names will be different):

DBF_EXTENT_NAMES = /dev/rdsk/c1t3d0s5, /dev/rdsk/c2t2d0s6;

Extent disk configuration

Extents benefit from efficiency of both random access (16 KB repository pages) and sequential access. Don’t optimize one by compromising the other. Sequential access is important for such operations as garbage collection and making or restoring backups. Use of RAID devices or striped file systems that cannot efficiently support both random and sequential access may reduce overall performance. Simple disk mirroring may give better results.

Setting a Maximum Size for an Extent

You can specify a maximum size in MB for each extent through the DBF_EXTENT_SIZES configuration option. When the extent reaches that size, GemStone stops allocating space in it. If no size is specified, which is the default, GemStone continues to allocate space for the extent until the file system or raw partition is full.

NOTE

For best performance using raw partitions, the maximum size should be 16MB smaller than the size of the partition, so that GemStone can avoid having to handle system errors. For example, for a 2 GB partition, set the size to 1984 MB.

Each size entry is for the corresponding entry in the DBF_EXTENT_NAMES configuration option. Use a comma to mark the position of an extent for which you do not want to specify a limit. For example, the following settings are for two extents in raw partitions of 500 MB each.

DBF_EXTENT_NAMES = /dev/rdsk/c1t3d0s5, /dev/rdsk/c2t2d0s6;

DBF_EXTENT_SIZES = 484MB, 484MB;
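The guideline of leaving 16 MB unused in a raw partition, together with the 16 MB granularity of extent sizes, can be sketched in Python as follows. The function names are hypothetical, and the rounding shown simply applies the rule stated earlier in this section that sizes are taken down to a multiple of 16 MB.

```python
EXTENT_GRANULARITY_MB = 16

def align_down_mb(size_mb):
    """Round a size down to a multiple of 16 MB."""
    return (size_mb // EXTENT_GRANULARITY_MB) * EXTENT_GRANULARITY_MB

def raw_partition_extent_limit_mb(partition_mb):
    """Partition size less 16 MB, rounded down to the 16 MB granularity."""
    return align_down_mb(partition_mb - EXTENT_GRANULARITY_MB)
```

A 2000 MB partition gives 1984 MB, which is already a multiple of 16 MB. A 500 MB partition gives 484 MB before rounding; if the rounding rule applies as described, that setting would take effect as 480 MB.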

Pregrowing Extents to a Fixed Size

Allocating disk space requires a system call that introduces run time overhead. Each time an extent is expanded (Figure 1.3), your application must incur this overhead and then initialize the added extent pages.

Figure 1.3 Growing an Extent on Demand

You can increase I/O efficiency while reducing file system fragmentation by instructing GemStone to allocate an extent to a predetermined size (called pregrowing it) at startup.

You can specify a pregrow size for each extent through the DBF_PRE_GROW configuration option. When this is set, the Stone repository monitor allocates the specified amount of disk space when it starts up with an extent that is smaller than the specified size. The extent files can then grow as needed up to the limit of DBF_EXTENT_SIZES, if that is set, or to the limits of disk space.

Pregrowing extents avoids repeated system calls to allocate and initialize additional space incrementally. This technique can be used with any number of extents, and with either raw disk partitions or extents in a file system.

The disadvantages of pregrowing extents are that it takes longer to start up GemStone, and unused disk space allocated to pregrown extents is unavailable for other purposes.

Pregrowing Extents to the Maximum Size

You may pregrow extents to the maximum sizes specified in DBF_EXTENT_SIZES by setting DBF_PRE_GROW to True, rather than to a list of pregrow sizes.

Pregrowing extents to the maximum size provides a simple way to reserve space on a disk for a GemStone extent. Since extents cannot be expanded beyond the maximum specified size, the system should be configured with sufficiently large extent sizes that the limit will not be reached, to avoid running out of space.

Figure 1.4 Pregrowing an Extent

Two configuration options work together to pregrow extents. DBF_PRE_GROW enables the operation, and optionally sets a minimum value to which to size that extent. When DBF_PRE_GROW is set to True, the Stone repository monitor allocates the space specified by DBF_EXTENT_SIZES for each extent, when it creates a new extent or starts with an extent that is smaller than the specified size. It may also be set to a list of sizes, which sets the pregrow size individually for each extent to a value that is smaller than DBF_EXTENT_SIZES.

For example, to pregrow extents to the maximum size of 1 GB each:

DBF_EXTENT_SIZES = 1GB, 1GB, 1GB;

DBF_PRE_GROW = TRUE;

To pregrow extents to 500 MB, but allow them to later expand to 1 GB if GemStone requires additional space and that disk space is available:

DBF_EXTENT_SIZES = 1GB, 1GB, 1GB;

DBF_PRE_GROW = 500MB, 500MB, 500MB;
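The interaction between DBF_EXTENT_SIZES and DBF_PRE_GROW described above can be checked before the Stone is started. The following Python sketch is an illustration under stated assumptions, not a GemStone tool: the function name is hypothetical, sizes are plain integers in MB, `None` stands for an extent with no maximum size, and rejecting `True` when an extent has no maximum is a choice made for this sketch rather than documented Stone behavior.

```python
from typing import List, Optional, Union


def effective_pregrow_mb(extent_sizes_mb: List[Optional[int]],
                         pre_grow: Union[bool, List[int]]) -> List[int]:
    """Return the size (MB) to which each extent would be pregrown.

    extent_sizes_mb models DBF_EXTENT_SIZES (None = no limit set).
    pre_grow models DBF_PRE_GROW: False, True, or a list of sizes.
    """
    if pre_grow is False:
        return [0] * len(extent_sizes_mb)  # pregrowing is disabled
    if pre_grow is True:
        # True means "pregrow to the maximum size", so every extent needs one.
        if any(size is None for size in extent_sizes_mb):
            raise ValueError("DBF_PRE_GROW = TRUE needs a maximum for every extent")
        return list(extent_sizes_mb)
    if len(pre_grow) != len(extent_sizes_mb):
        raise ValueError("one pregrow size is expected per extent")
    for grow, limit in zip(pre_grow, extent_sizes_mb):
        if limit is not None and grow > limit:
            raise ValueError(f"pregrow size {grow} MB exceeds maximum {limit} MB")
    return list(pre_grow)


# Three extents limited to 1024 MB each, pregrown to 500 MB each.
print(effective_pregrow_mb([1024, 1024, 1024], [500, 500, 500]))
```

Passing `True` as the second argument models the first example above, in which each extent is pregrown to its maximum size.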

Allocating Data to Multiple Extents

Larger applications may improve performance by dividing the repository into multiple extents. Assuming the extents are on multiple spindles or the disk controller manages files as if they were, this allows several extents to be active at once.

The setting for the DBF_ALLOCATION_MODE configuration option determines whether GemStone allocates new disk pages to multiple extents by filling each extent sequentially or by balancing the extents using a set of weights you specify. When the extents are on separate spindles, weighted allocation typically yields better performance because it distributes disk accesses among them.

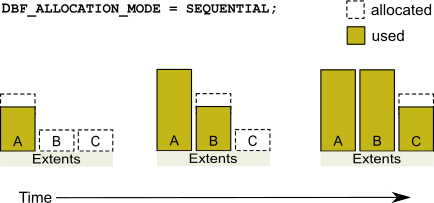

Sequential Allocation

By default, the Stone repository monitor allocates disk resources sequentially by filling one extent to capacity before opening the next extent. (See Figure 1.5) For example, if a logical repository consists of three extents named A, B, and C, then all of the disk resources in A will be allocated before any disk resources from B are used, and so forth. Sequential allocation is used when the DBF_ALLOCATION_MODE configuration option is set to SEQUENTIAL.

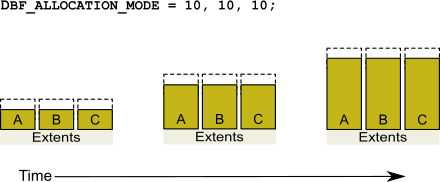

Weighted Allocation

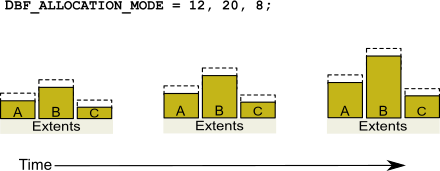

For weighted allocation, you use DBF_ALLOCATION_MODE to specify the number of extent pages to be allocated from each extent on each allocation request. The allocations are positive integers in the range 1..40 (inclusive), with each element corresponding to an extent of DBF_EXTENT_NAMES. For example:

DBF_EXTENT_NAMES = a.dbf, b.dbf, c.dbf;

DBF_ALLOCATION_MODE = 12, 20, 8;

You can think of the total weight of a repository as the sum of the weights of its extents. When the Stone allocates space from the repository, each extent contributes an allocation proportional to its weight.

NOTE

We suggest that you avoid using very small values for weights, such as “1,1,1”. It’s more efficient to allocate a group of pages at once, such as “10,10,10”, than to allocate single pages repeatedly.

One reason for specifying weighted allocation is to share the I/O load among a repository’s extents. For example, you can create three extents with equal weights, as shown in Figure 1.6.

Figure 1.6 Equally Weighted Allocation

Although equal weights are most common, you can adjust the relative extent weights for other reasons, such as to favor a faster disk drive. For example, suppose we have defined three extents: A, B, and C. If we defined their weights to be 12, 20, and 8 respectively, then for every 40 disk units (pages) allocated, 12 would come from A, 20 from B, and 8 from C. Another way of stating this formula is that because B’s weight is 50% of the total repository weight, 50% of all newly-allocated pages are taken from extent B. Figure 1.7 shows the result.

Figure 1.7 Proportionally Weighted Allocation
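The proportional arithmetic behind weighted allocation can be reproduced in a few lines. The following Python sketch is illustrative only: the function name is hypothetical, and it models nothing beyond the ratio of each weight to the total weight described above.

```python
from fractions import Fraction


def allocation_shares(weights):
    """Map each extent's weight to its fraction of newly allocated pages."""
    for weight in weights:
        if not 1 <= weight <= 40:
            raise ValueError("weights must be integers in the range 1..40")
    total = sum(weights)  # total weight of the repository
    return [Fraction(weight, total) for weight in weights]


# Weights from the example above: extents A, B, and C.
weights = {"A": 12, "B": 20, "C": 8}
shares = allocation_shares(list(weights.values()))
for name, share in zip(weights, shares):
    print(f"extent {name}: {float(share):.0%} of new pages")
```

For the weights 12, 20, and 8, the shares are 30%, 50%, and 20%, which matches the description of Figure 1.7.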

You can modify the relative extent weights by editing your GemStone configuration file and modifying the values listed for DBF_ALLOCATION_MODE. You can also change DBF_ALLOCATION_MODE to SEQUENTIAL without harming the system. The new values you specify take effect the next time you start the GemStone system.

Effect of Clustering on Allocation Mode

Explicit clustering of objects using instances of ClusterBucket that explicitly specify an extentId takes precedence over DBF_ALLOCATION_MODE. For information about clustering objects, refer to the GemStone/S 64 Bit Programming Guide.

Weighted Allocation for Extents Created at Run Time

Smalltalk methods for creating extents at run time (Repository>>createExtent: and Repository>>createExtent:withMaxSize:) do not provide a way to specify a weight for the newly-created extent. If your repository uses weighted allocation, the Stone repository monitor assigns the new extent a weight that is the simple average of the repository’s existing extents. For instance, if the repository is composed of three extents with weights 6, 10, and 20, the default weight of a newly-created fourth extent would be 12 (36 divided by 3).
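The averaging rule can be sketched as follows. This Python fragment is an illustration, not GemStone code: the function name is hypothetical, and because the text above does not say how a non-integral average is rounded, the sketch returns the exact average and leaves rounding aside.

```python
from fractions import Fraction


def default_weight_for_new_extent(existing_weights):
    """Return the simple average of the existing extent weights."""
    if not existing_weights:
        raise ValueError("at least one existing extent is required")
    return Fraction(sum(existing_weights), len(existing_weights))


# Three existing extents with weights 6, 10, and 20.
print(default_weight_for_new_extent([6, 10, 20]))  # prints: 12
```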

To Configure the Transaction Logs

Configuring the transaction logs involves considerations similar to those for extents:

- Choosing a logging mode

- Providing sufficient disk space

- Minimizing I/O contention

- Providing fault tolerance, through the choice of logging mode

Choosing a Logging Mode

GemStone provides two modes of transaction logging:

- Full logging, the default mode, provides real-time incremental backup of the repository. Deployed applications should use this mode. All transactions are logged regardless of their size, and the resulting logs can be used in restoring the repository from a GemStone backup.

- Partial logging is intended for use during evaluation or early stages of application development. Partial logging allows a simple operation to run unattended for an extended period and permits automatic recovery from system crashes that do not corrupt the repository. Logs created in this mode cannot be used in restoring the repository from a backup.

CAUTION

The only backups to which you can apply transaction logs are those made while the repository is in full logging mode. If you change to full logging, be sure to make a GemStone backup as soon as circumstances permit.

Changing the logging mode from full to partial logging requires special steps. See To Change to Partial Logging. To re-enable full transaction logging, change the configuration setting STN_TRAN_FULL_LOGGING to True and restart the Stone repository monitor.

For general information about the logging mode and the administrative differences, see Logging Modes.

Estimating the Log Size

How much disk space does your application need for transaction logs? The answer depends on several factors:

- The logging mode that you choose

- Characteristics of your transactions

- How often you archive and remove the logs

If you have configured GemStone for full transaction logging (that is, STN_TRAN_FULL_LOGGING is set to True), you must allow sufficient space to log all transactions until you next archive the logs.

CAUTION

If the Stone exhausts the transaction log space, users will be unable to commit transactions until space is made available.

You can estimate the space required from your transaction rate and the number of bytes modified in a typical transaction. Example 1.2 provides an estimate for an application that expects to generate 4500 transactions a day.

At any point, the method Repository>>oldestLogFileIdForRecovery identifies the oldest log file needed for recovery from the most recent checkpoint, if the Stone were to crash. Log files older than the most recent checkpoint (the default maximum interval is 5 minutes) are needed only if it becomes necessary to restore the repository from a backup. Although the older logs can be retrieved from archives, you may want to keep them online until the next GemStone full backup, if you have sufficient disk space.

Example 1.2 Space for Transaction Logs Under Full Logging

Average transaction rate = 5 per minute

Duration of transaction processing = 15 hours per day

Average transaction size = 5 KB

Archiving interval = Daily

Transactions between archives = 5 per minute * 60 minutes * 15 hours = 4500

Log space (minimum) = 4500 transactions * 5 KB = 22 MB (approximately)
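The estimate in Example 1.2 can be recomputed for other workloads. The following Python sketch is illustrative only: the function and parameter names are hypothetical, and it assumes 1 MB = 1024 KB and a steady transaction rate during processing hours.

```python
def log_space_estimate(tx_per_minute, hours_per_day, tx_size_kb,
                       days_between_archives=1):
    """Return (transactions, log space in MB) accumulated between archives."""
    transactions = tx_per_minute * 60 * hours_per_day * days_between_archives
    space_kb = transactions * tx_size_kb
    return transactions, space_kb / 1024


# Values from Example 1.2: 5 transactions per minute, 15 hours per day,
# 5 KB per transaction, logs archived daily.
transactions, space_mb = log_space_estimate(5, 15, 5)
print(f"{transactions} transactions, about {space_mb:.0f} MB of log space")
```

The result is a minimum. Allow additional space for peak loads and for any logs you keep online until the next full backup.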

If GemStone is configured for partial logging, you need only provide enough space to maintain transaction logs since the last repository checkpoint. Ordinarily, two log files are sufficient: the current log and the immediately previous log. (In partial logging mode, transaction logs are used only after an unexpected shutdown to recover transactions since the last checkpoint.)

Choosing the Log Location and Size Limit

The considerations in choosing a location for transaction logs are similar to those for extents:

- Keep transaction logs on a different disk than operating system swap space.

- Where possible, keep the extents and transaction logs on separate disks—doing so reduces I/O contention while increasing fault tolerance.

- Because update-intensive applications primarily are writing to the transaction log, storing raw data in a disk partition (rather than in a file system) may yield better performance.

WARNING

Because the transaction logs are needed to recover from a system crash, do NOT place them in directories such as /tmp that are automatically cleared during power-up.

Transaction logs use sequential access exclusively, so the devices can be optimized for that access.

With raw partitions, or when in partial transaction logging mode, GemStone requires at least two log locations (directories or raw partitions) so it can switch to another when the current one is filled. In full logging mode with transaction logs in the file system, a single directory may be used, in which case all transaction logs are created in that directory.

When you set the log locations in the configuration file, you should also check their size limit.

Although the size of 100 MB provided in the default configuration file is adequate in many situations, update-intensive applications should consider a larger size to limit the frequency with which logs are switched. Each switch causes a checkpoint to occur, which can impact performance.

NOTE

For best performance using raw partitions, the size setting should be slightly smaller than the size of the partition so GemStone can avoid having to handle system errors. For example, for a 2 GB partition, set it to 1998 MB.

The following example sets up a log in a 2 GB raw partition and a directory of 100 MB logs in the file system. This setup is a workable compromise when the number of raw partitions is limited. The file system logs give the administrator time to archive the primary log when it is full.

STN_TRAN_LOG_DIRECTORIES = /dev/rdsk/c4d0s2, /user3/tranlogs;

STN_TRAN_LOG_SIZES = 1998, 100;

To Configure Server Response to Gem Fatal Errors

The Stone repository monitor is configurable in its response to a fatal error detected by a Gem session process. If configured to do so, the Stone can halt and dump debug information if it receives notification from a Gem that the Gem process died with a fatal error. By stopping both the Gem and the Stone at this point, the possibility of repository corruption is minimized.

In the default mode, the Stone does not halt if a Gem encounters a fatal error. This is usually preferable for deployed production systems.

During application development, it may be helpful to know exactly what the Stone was doing when the Gem went down. In some cases it may also be preferable to minimize the risk of repository corruption as far as possible, at the cost of a possible system outage. To configure the Stone to halt when a fatal Gem error is encountered, change the following in the Stone’s configuration file:

STN_HALT_ON_FATAL_ERR = TRUE;

To Set File Permissions for the Server

The primary consideration in setting file permissions for the Server is to protect the repository extents. All reads and writes should be done through GemStone repository executables: the executables that run the Stone, Page Servers, and Gems.

For the tightest security, you can have the extents and executables owned by a single UNIX account, using the setuid bit on the executable files, and making the extents writable only by that account. When setuid is set, the processes started from that executable are owned by the owner you specify for the file, regardless of which user actually starts them.

Alternatively, you can make the extents writable by a particular UNIX group and have all users belong to that group. This has the advantage that fileouts and other I/O operations performed by linked sessions are done using the individual user’s id instead of the single gsadmin account.

Using the Setuid Bit

The strongest security is provided when all extents and executables are owned by, and writable only by, a single UNIX account. When the setuid bit is set, the processes started from that executable are owned by the owner you specify for the file.

Table 1.2 shows the recommended file settings. In this table, gsadmin and gsgroup can be any ordinary UNIX account and group (do NOT use the root account for this purpose). The person who starts the Stone must be logged in as gsadmin or have execute permission.

Ownership and permissions for the netldid executable depend on the authentication mode chosen, and are discussed in Chapter 3.

If you are logged in as root when you run the GemStone installation program, it offers to set file protections in the manner described in Table 1.2. To set them manually, do the following as root:

# cd $GEMSTONE/sys

# chown gsadmin gem pgsvr pgsvrmain stoned

# chmod u+s gem pgsvr pgsvrmain stoned

# cd $GEMSTONE/data

# chown gsadmin extent0.dbf

# chmod 600 extent0.dbf
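After running the commands above, you may want to confirm that the resulting modes are what you intended. The following Python sketch is a hypothetical helper, not part of the GemStone distribution. It relies only on the standard os and stat modules, and the paths you pass to it are your own.

```python
import os
import stat


def is_owner_only_rw(mode: int) -> bool:
    """True if the permission bits are exactly 600 (read/write for owner only)."""
    return stat.S_IMODE(mode) == 0o600


def has_setuid(mode: int) -> bool:
    """True if the setuid bit is set in the given file mode."""
    return bool(mode & stat.S_ISUID)


def check_server_files(extent_path: str, executable_paths: list) -> list:
    """Return a list of problems found; the list is empty if none are found."""
    problems = []
    if not is_owner_only_rw(os.stat(extent_path).st_mode):
        problems.append(f"{extent_path}: expected mode 600")
    for path in executable_paths:
        if not has_setuid(os.stat(path).st_mode):
            problems.append(f"{path}: setuid bit is not set")
    return problems
```

For example, you might call check_server_files with the path to extent0.dbf and the paths of the gem, pgsvr, pgsvrmain, and stoned executables, and then review any problems it reports. The sketch does not check file ownership.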

The protection mode for the shared memory segment is set by the configuration parameter SHR_PAGE_CACHE_PERMISSIONS.

You must take similar steps to provide access for repository clients, which are presented in Chapter 2. See To Set Ownership and Permissions for Session Processes.

Alternative: Use Group Write Permission

For sites that prefer not to use the setuid bit, the alternative is to make the extents writable by a particular UNIX group and have all users belong to that group. That group must be the primary group of the person who starts the Stone (that is, the one listed in /etc/passwd). Do the following, where gsgroup is a group of your choice:

% cd $GEMSTONE/data

% chmod 660 extent0.dbf

% chgrp gsgroup extent0.dbf

Sites that run linked sessions may also prefer to use this protection so that fileouts and other I/O operations that do not read or write the repository will be done using the individual user’s id instead of the single gsadmin account.

File Permissions for Files and Directories

GemStone creates log files and other special files in several locations. In a multi-user environment, the protection of these resources must be such that the appropriate file can be created or updated in response to actions by several users.

/opt/gemstone

All users should be able to read files in the directory /opt/gemstone/locks on each node (or an equivalent location, as discussed here). Users who will start a Stone or NetLDI process require read, write and execute access to /opt/gemstone/locks and (if used for logging) /opt/gemstone/log.

system.conf

The Stone must be able to write as well as read its primary configuration file. If certain configuration changes are made while the Stone is running, the Stone updates its configuration file; for example, Repository>>createExtent: updates the configuration file, so that subsequent restart will be correct. By default, this file is $GEMSTONE/data/system.conf. The user who owns the Stone process must have write permission to the configuration file.

How To Set Up a Raw Partition

WARNING

Using raw partitions requires extreme care. Overwriting the wrong partition destroys existing information, which in certain cases can make data on the entire disk inaccessible.

The instructions in this section are intentionally incomplete. You will need to work with your system administrator to locate a partition of suitable size for your extent or transaction log. Consult the system documentation for guidance as necessary.

You can mix file system-based files and raw partitions in the same repository, and you can add a raw partition to existing extents or transaction log locations. The partition reference in /dev must be readable and writable by anyone using the repository, so you should give the entry in /dev the same protection as you would use for the corresponding type of file in the file system.

The first step is to find a partition (raw device) that is available for use. Depending on your operating system, a raw partition may have a name like /dev/rdsk/c1t3d0s5, /dev/rsd2e, or /dev/vg03/rlvol1. Most operating systems have a utility or administrative interface that can assist you in identifying existing partitions; some examples are prtvtoc and vgdisplay. A partition is available if all of the following are true:

- It does not contain the root (/) file system (on some systems, the root volume group).

- It is not on a device that contains swap space.

- Either it does not contain a file system or that file system can be left unmounted and its contents can be overwritten.

- It is not already being used for raw data.

When you select a partition, make sure that any file system tables, such as /etc/vfstab, do not call for it to be mounted at system boot. If necessary, unmount a file system that is currently mounted and edit the system table. Use chmod and chown to set read-write permissions and ownership of the special device file the same way you would protect a repository file in a file system. For example, set the permissions to 600, and set the owner to the GemStone administrator.

If the partition will contain the primary extent (the first or only one listed in DBF_EXTENT_NAMES), initialize it by using the GemStone copydbf utility to copy an existing repository extent to the device. The extent must not be in use when you copy it. If the partition already contains a GemStone file, first use removedbf to mark the partition as being empty.

Partitions for transaction logs do not need to be initialized, nor do secondary extents into which the repository will expand later.

Sample Raw Partition Setup

The following example configures GemStone to use the raw partition /dev/rsd2d as the repository extent.

Step 1. If the raw partition already contains a GemStone file, mark it as being empty. (The copydbf utility will not overwrite an existing repository file.)

% removedbf /dev/rsd2d

Step 2. Use copydbf to install a fresh extent on the raw partition. (If you copy an existing repository, first stop any Stone that is running on it.)

% copydbf $GEMSTONE/bin/extent0.dbf /dev/rsd2d

Step 3. As root, change the ownership and the permission of the partition special device file in /dev to what you ordinarily use for extents in a file system. For instance:

# chown gsadmin /dev/rsd2d

# chmod 600 /dev/rsd2d

You should also consider restricting the execute permission for $GEMSTONE/bin/removedbf and $GEMSTONE/bin/removeextent to further protect your repository. In particular, these executable files should not have the setuid (S) bit set.

Step 4. Edit the Stone’s configuration file to show where the extent is located:

DBF_EXTENT_NAMES = /dev/rsd2d;

Step 5. Use startstone to start the Stone repository monitor in the usual manner.

Changing Between Files and Raw Partitions

This section tells you how to change your configuration by moving existing repository extent files to raw partitions or by moving existing extents in raw partitions to files in a file system. You can make similar changes for transaction logs.

Moving an Extent to a Raw Partition

To move an extent from the file system to a raw partition, do this:

Step 1. Define the raw disk partition device. Its size should be at least 16 MB larger than the existing extent file.

Step 2. Stop the Stone repository monitor.

Step 3. Edit the repository’s configuration file, substituting the device name of the partition for the file name in DBF_EXTENT_NAMES.

Set DBF_EXTENT_SIZES for this extent to be 16 MB smaller than the size of the partition.

Step 4. Use copydbf to copy the extent file to the raw partition. (If the partition previously contained a GemStone file, first use removedbf to mark it as unused.)

Moving an Extent to the File System

The procedure to move an extent from a raw partition to the file system is similar:

Step 1. Stop the Stone repository monitor.

Step 2. Edit the repository’s configuration file, substituting the file pathname for the name of the partition in DBF_EXTENT_NAMES.

Step 3. Use copydbf to copy the extent to a file in a file system, then set the file permissions to the ones you ordinarily use.

Moving Transaction Logging to a Raw Partition

To switch from transaction logging in the file system to logging in a raw partition, do this:

Step 1. Define the raw disk partition. If you plan to copy the current transaction log to the partition, its size should be at least 1 to 2 MB larger than the current log file.

Step 2. Stop the Stone repository monitor.

Step 3. Edit the repository’s configuration file, substituting the device name of the partition for the directory name in STN_TRAN_LOG_DIRECTORIES. Make sure that STN_TRAN_LOG_SIZES for this location is 1 to 2 MB smaller than the size of the partition.

Step 4. Use copydbf to copy the current transaction log file to the raw partition. (If the partition previously contained a GemStone file, first use removedbf to mark it as unused.)

You can determine the current log from the last message “Creating a new transaction log” in the Stone’s log. If you don’t copy the current transaction log, the Stone will open a new one with the next sequential fileId, but it may be opened in another location specified by STN_TRAN_LOG_DIRECTORIES.

Moving Transaction Logging to the File System

The procedure to move transaction logging from a raw partition to the file system is similar:

Step 1. Stop the Stone repository monitor.

Step 2. Edit the repository’s configuration file, substituting a directory pathname for the name of the partition in STN_TRAN_LOG_DIRECTORIES.

Step 3. Use copydbf to copy the current transaction log to a file in the specified directory. The copydbf utility will generate a file name like tranlognnn.dbf, where nnn is the internal fileId of that log.

1.3 How To Access the Server Configuration at Run Time

GemStone provides several methods in class System that let you examine, and in certain cases modify, the configuration parameters at run time from Smalltalk.

To Access Current Settings at Run Time

Class methods in System, in the category Configuration File Access, let you examine the system’s Stone configuration. The following access methods all provide similar server information:

stoneConfigurationReport

Returns a SymbolDictionary whose keys are the names of configuration file parameters, and whose values are the current settings of those parameters in the repository monitor process.

configurationAt: aName

Returns the value of the specified configuration parameter, giving preference to the current session process if the parameter applies to a Gem.

stoneConfigurationAt: aName

Returns the value of the specified configuration parameter from the Stone process, or returns nil if that parameter is not applicable to a Stone.

(The corresponding methods for accessing a session configuration are described here.)

Here is a partial example of the Stone configuration report:

topaz 1> printit

System stoneConfigurationReport asReportString

%

#'StnEpochGcEnabled' false

#'StnPageMgrRemoveMinPages' 40

#'STN_TRAN_LOG_SIZES' 100

#'StnTranLogDebugLevel' 0

...

Keys in mixed capitals and lowercase, such as StnEpochGcEnabled, are internal run-time parameters.

To Change Settings at Run Time

The class method System class>>configurationAt: aName put: aValue lets you change the value of the internal run-time parameters in Table 1.3, if you have the appropriate privileges.

In the reports described in the preceding section, parameters with names in all uppercase are read-only; for parameters that can be changed at runtime, the name is in mixed case.

CAUTION

Avoid changing configuration parameters unless there is a clear reason for doing so. Incorrect settings can have serious adverse effects on performance. For additional guidance about run-time changes to specific parameters, see Appendix A, “GemStone Configuration Options”.

The following example first obtains the value of #StnAdminGcSessionEnabled. This value can be changed at run time by a user with GarbageCollection privilege:

topaz 1> printit

System configurationAt: #StnAdminGcSessionEnabled

%

true

topaz 1> printit

System configurationAt: #StnAdminGcSessionEnabled put: false

%

false

For more information about these methods, see the comments in the image.

1.4 How To Tune Server Performance

There are a number of configuration options by which you can tune the GemStone server. These options can help make better use of the shared page cache, reduce swapping, and control disk activity caused by repository checkpoints.

Tuning the Shared Page Cache

Two configuration options can help you tailor the shared page cache to the needs of your application: SHR_PAGE_CACHE_SIZE_KB and SHR_SPIN_LOCK_COUNT.

You may also want to consider object clustering within Smalltalk as a means of increasing cache efficiency.

Adjusting the Cache Size

Adjust the SHR_PAGE_CACHE_SIZE_KB configuration option according to the total number of objects in the repository and the number accessed at one time. For proper performance, the entire object table should be in shared memory.

In general, the more of your repository you can hold in your cache, the better your performance will be, provided you have enough memory to avoid swapping.

You should review the configuration recommendations given earlier (under Estimating the Size of the Shared Page Cache) in light of your application’s design and usage patterns. Estimates of the number of objects queried or updated are particularly useful in tuning the cache.

You can use the shared page cache statistics for a running application to monitor the load on the cache. In particular, the statistics FreeFrameCount and FramesFromFindFree may be useful, as well as FramesFromFreeList.

Matching Spin Lock Limit to Number of Processors

The setting for the SHR_SPIN_LOCK_COUNT configuration option specifies the number of times a process should attempt to obtain a lock in the shared page cache using the spin lock mechanism before resorting to setting a semaphore and sleeping. We recommend you leave SHR_SPIN_LOCK_COUNT set to -1 (the default), which causes GemStone to determine whether multiple processors are installed and set the parameter accordingly.

Reducing Swapping

Be careful not to make the shared page cache so large that it forces swapping. You should ensure that your system has sufficient RAM to hold the configured shared page cache, with extra space for the other memory requirements.

Controlling Checkpoint Frequency

On each commit, committed changes are immediately written to the transaction logs, but the writing of this data, recorded on "dirty pages," from the shared page cache to the extents may lag behind.

At a checkpoint, all remaining committed changes that have not yet been written to the extents are written out, and the repository updated to a new consistent committed state. If the volume of these waiting committed changes is high, there may be a performance hit as this data is written out. Between checkpoints, new committed changes written to the extents are not yet considered part of the repository's consistent committed state until the next checkpoint.

If checkpoints interfere with other GemStone activity, you may want to adjust their frequency.

- In full transaction logging mode, most checkpoints are determined by the STN_CHECKPOINT_INTERVAL configuration option, which by default is five minutes. A few Smalltalk methods, such as Repository>>fullBackupTo:, force a checkpoint at the time they are invoked. A checkpoint also is performed each time the Stone begins a new transaction log.

- In partial logging mode, checkpoints also are triggered by any transaction that is larger than STN_TRAN_LOG_LIMIT, which sets the size of the largest entry that is to be appended to the transaction log. The default limit is 1 MB of log space. If checkpoints are too frequent in partial logging mode, it may help to raise this limit. Conversely, during bulk loading of data with large transactions, it may be desirable to lower this limit to avoid creating large log files.

A checkpoint also occurs each time the Stone repository monitor is shut down gracefully, as by invoking stopstone or System class>>shutDown. This checkpoint permits the Stone to restart without having to recover from transaction logs. It also permits extent files to be copied in a consistent state.

While less frequent checkpoints may improve performance in some cases, they may extend the time required to recover after an unexpected shutdown. In addition, since checkpoints are important in the recycling of repository space, less frequent checkpoints can mean more demand on free space (extent space) in the repository.

Suspending Checkpoints

You can call the method System class>>suspendCheckpointsForMinutes: to suspend checkpoints for a given number of minutes, or until System class>>resumeCheckpoints is executed. (To execute these Smalltalk methods, you must have the required GemStone privilege, as described in Chapter 6, “User Accounts and Security”.)

Generally, this approach is used only to allow online extent backups to complete. For details on how to suspend and resume checkpoints, see How To Make an Extent Snapshot Backup.

Tuning Page Server Behavior

GemStone uses page servers for three purposes:

- To write dirty pages to disk.

- To add free frames to the free frame list, from which a Gem can take as needed.

- To transfer pages from the Stone host to the shared page cache host, if different.

The AIO page server is a type of page server that performs all three functions. The AIO page server is running at all times, and is required in order to write updated data (dirty pages) to disk. The default configuration starts only one thread within the AIO page server. Larger applications with multiple extents will want to configure a larger number of threads to avoid a performance bottleneck.

In addition, by default, the free frame page server is running. The free frame page server is dedicated only to the second task listed above: adding free frames to the free list. In some cases, increasing the number of free frame page servers can improve overall system performance. For example, if Gems are performing many operations requiring writing pages to disk, the AIO page server may have to spend all its time writing pages, never getting a chance to add free frames to the free list. Alternatively, if Gems are performing operations that require only reading, the AIO page server will see no dirty frames in the cache (dirty frames being the signal that prompts it to take action). In that case, it may sleep for a second, even though free frames are present in the cache and need to be added to the free list.

Gems that are on a remote host also need a page server to transfer pages to their local shared page cache. There is always one thread per remote Gem.

To Add AIO Page Server Threads

By default the Stone spawns a page server process with a single thread on its local node, to perform asynchronous I/O (AIO) between the shared page cache and the extents. This page server is the process that updates extents on the local node during a checkpoint. (In some cases, the Stone may use additional page server threads temporarily during startup to pregrow multiple extents.)

If your configuration has multiple extents on separate disk spindles, you should generally increase the number of threads in the AIO page server. You can do this by changing the STN_NUM_LOCAL_AIO_SERVERS configuration option.

For multiple page server threads to improve performance, they must be able to execute at the same time and write to disk at the same time. If you have only one CPU, or your extents are on a single disk spindle, multiple AIO page server threads will not be able to write pages out faster than a single thread.
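
The reasoning above can be reduced to a rough rule of thumb: the number of writes that can truly proceed in parallel is bounded by the smallest of the thread count, the CPU count, and the number of independent disk spindles. The sketch below encodes that bound. It is a deliberate simplification, not a GemStone formula: the function name is hypothetical, and it ignores operating system scheduling, RAID striping, and other real-world factors.

```python
def effective_parallel_writers(aio_threads, cpus, spindles):
    """Rough upper bound on the number of page writes that can run at once.

    Assumption: a thread only adds throughput if it has both a CPU to run on
    and a separate spindle to write to, so the smallest count is the limit.
    """
    if min(aio_threads, cpus, spindles) < 1:
        raise ValueError("all counts must be at least 1")
    return min(aio_threads, cpus, spindles)


# Four threads gain nothing on one CPU or on a single spindle.
single_cpu = effective_parallel_writers(aio_threads=4, cpus=1, spindles=4)
single_spindle = effective_parallel_writers(aio_threads=4, cpus=8, spindles=1)
well_matched = effective_parallel_writers(aio_threads=4, cpus=8, spindles=4)
```

Under this model, the first two configurations behave like a single thread, and only the third can use all four threads.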

Free Frame Page Server

A Gem can get free frames either from the free list (the quick way) or, if sufficient free frames are not listed, by scanning the shared page cache for a free frame instead. (What constitutes sufficient free frames is determined by the GEM_FREE_FRAME_LIMIT configuration option.)

To assist the AIO page server in adding frames back to the free list, the Stone spawns a free frame page server.

By default, when you start the Stone, the free frame page server process uses the same number of threads as the AIO page server process. This is strongly recommended so that the distribution of free pages and used pages remains balanced over the repository extents.

Process Free Frame Caches

There is a communication overhead each time a process takes free frames from, or returns them to, the free frame list. To reduce this overhead, you can configure the Gems and their remote page servers to keep a local free frame cache, so that multiple free frames are moved between the cache and the free frame list in a single operation.

When using the free frame cache, the Gem or remote page server removes enough frames from the free list to refill the cache in a single operation. When adding frames to the free list, the process does not add them until the cache is full.

You can control the size of the Gem and remote page server free frame caches by setting the configuration parameters GEM_FREE_FRAME_CACHE_SIZE and GEM_PGSVR_FREE_FRAME_CACHE_SIZE, respectively.

The default behavior depends on the size of the shared page cache. If the shared page cache is 100 MB or larger, a free frame cache size of 10 is used, so ten free frames are acquired in one operation when the cache is empty. For shared page cache sizes less than 100 MB, the Gem or remote page server acquires the frames one at a time.
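
The benefit of batching can be seen in a toy simulation. The following sketch is not GemStone's implementation: all class and function names are hypothetical, and the shared free frame list is reduced to a queue that counts how often it is accessed. It assumes the behavior described above: an empty cache is refilled in one operation, and released frames are handed back only once the cache is full.

```python
from collections import deque


class SharedFreeList:
    """Toy stand-in for the shared free frame list that counts every access."""

    def __init__(self, frame_count):
        self.frames = deque(range(frame_count))
        self.operations = 0  # each take/give is one trip to the shared list

    def take(self, wanted):
        """Remove up to `wanted` frames in a single operation."""
        self.operations += 1
        count = min(wanted, len(self.frames))
        return [self.frames.popleft() for _ in range(count)]

    def give(self, frames):
        """Return a batch of frames in a single operation."""
        self.operations += 1
        self.frames.extend(frames)


class ProcessFreeFrameCache:
    """Per-process cache; a size of 1 models taking frames one at a time."""

    def __init__(self, shared, cache_size):
        self.shared = shared
        self.cache_size = max(1, cache_size)
        self.frames = []

    def acquire(self):
        # Refill the whole cache in one operation when it runs empty.
        if not self.frames:
            self.frames = self.shared.take(self.cache_size)
        if not self.frames:
            raise RuntimeError("no free frames left in the shared list")
        return self.frames.pop()

    def release(self, frame):
        # Hold released frames locally until the cache is full, then flush.
        self.frames.append(frame)
        if len(self.frames) >= self.cache_size:
            self.shared.give(self.frames)
            self.frames = []


def shared_list_operations(cache_size, frames_needed, total_frames=1000):
    """Count trips to the shared list while acquiring `frames_needed` frames."""
    shared = SharedFreeList(total_frames)
    cache = ProcessFreeFrameCache(shared, cache_size)
    for _ in range(frames_needed):
        cache.acquire()
    return shared.operations
```

In this model, acquiring 30 frames costs 3 trips to the shared list with a cache size of 10, compared with 30 trips when frames are taken one at a time.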

1.5 How To Run a Second Repository

You can run more than one repository on a single node—for example, separate production and development repositories. There are several points to keep in mind:

- Each repository requires its own Stone repository monitor process, extent files, transaction logs, and configuration file. Each Stone will start its own shared page cache monitor and a set of other processes, as described here.

- Multiple Stones that are running the same version of GemStone can share a single installation directory, provided that you create separate repository extents, transaction logs, and configuration files. If performance is a concern, the first step should be to isolate each Stone’s data directory and the system swap space on separate drives. Then, review the discussion under Recommendations About Disk Usage.

- You must give each Stone a unique name at startup. That name is used to identify the server and to maintain separate log files. Users will connect to the repository by specifying the Stone’s name.

- A single NetLDI serves all Stones and Gem session processes on a given node, provided all Stones are running the same version. If you are running two different versions of GemStone, you will need two NetLDIs.
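
The points above can be turned into a simple planning check before starting a second Stone. The sketch below is illustrative only and does not call any GemStone tool: the StonePlan fields, the paths, and the version labels are hypothetical. It flags resources that two Stones would share, and counts NetLDIs on the assumption that one is needed per distinct GemStone version on the node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StonePlan:
    """Hypothetical description of one Stone planned for a node."""
    name: str
    version: str
    extent_file: str
    tranlog_dir: str
    config_file: str


def check_node_plan(stones):
    """Return (problems, netldis_needed) for the Stones planned on one node."""
    problems = []
    # Each Stone needs its own name, extent, transaction logs, and config file.
    for field in ("name", "extent_file", "tranlog_dir", "config_file"):
        seen = set()
        for stone in stones:
            value = getattr(stone, field)
            if value in seen:
                problems.append(f"{field} {value!r} is used by more than one Stone")
            seen.add(value)
    # Assumption from the text: one NetLDI per distinct GemStone version.
    netldis_needed = len({stone.version for stone in stones})
    return problems, netldis_needed


# Hypothetical production and development repositories on the same node.
plan = [
    StonePlan("prodStone", "vA", "/prod/data/extent0.dbf", "/prod/tranlogs", "/prod/prod.conf"),
    StonePlan("devStone", "vA", "/dev/data/extent0.dbf", "/dev/tranlogs", "/dev/dev.conf"),
]
problems, netldis_needed = check_node_plan(plan)
```

For the sample plan, the check reports no conflicts and a single NetLDI, because both Stones use the same version and share no files.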